Description

Detailed Parameter Table

| Parameter Name | Parameter Value |

| Product model | NVIDIA H100 |

| Manufacturer | NVIDIA |

| Product category | Flagship Data Center GPU for AI Training, Inference, and High-Performance Computing (HPC) |

| GPU Architecture | Hopper Architecture (4nm manufacturing process); supports Multi-Instance GPU (MIG) v3.0 |

| GPU Cores | 16,896 CUDA Cores; 528 Tensor Cores (4th Gen); 66 Ray Tracing Cores (3rd Gen) |

| Memory Configuration | 80GB HBM3 (High-Bandwidth Memory); 50MB L2 Cache; ECC memory support for data integrity |

| Memory Bandwidth | 3.35 TB/s (HBM3); 900 GB/s NVLink 4.0 (for multi-GPU connectivity) |

| Compute Performance | 67 TFLOPS (FP64 HPC); 3,291 TFLOPS (FP8 AI Training); 6,581 TFLOPS (FP8 AI Inference) |

| Power Consumption | 700W Max TDP; Optimized for data center power efficiency (1.4x performance-per-watt vs. prior gen) |

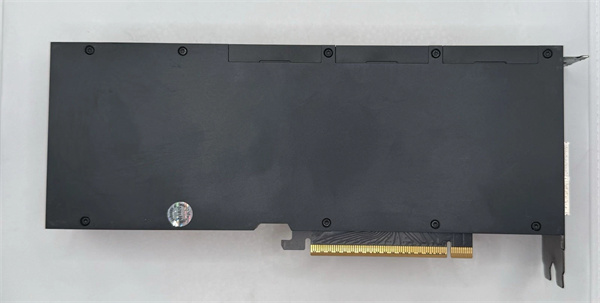

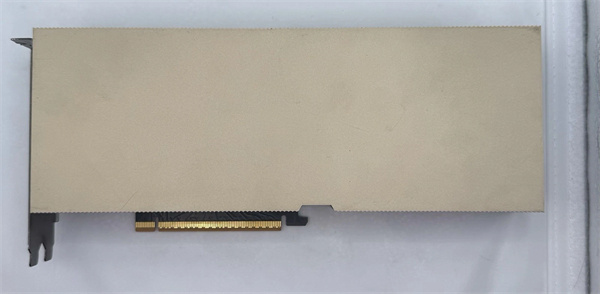

| Physical Dimensions | PCIe 5.0 x16 form factor (4.43” x 16.73” / 112.5mm x 425mm); Dual-slot cooling design |

| Software Support | CUDA 12.x SDK; cuDNN 8.x (for deep learning); TensorRT 8.x (for inference optimization); NVIDIA AI Enterprise suite compatibility |

| Connectivity | 4x NVLink 4.0 ports (400 GB/s per link); PCIe 5.0 x16 interface; Support for NVIDIA Quantum InfiniBand networking |

| Compatibility | Works with standard data center servers (e.g., Dell PowerEdge R760, HPE ProLiant DL380 Gen11); Compatible with Linux (RHEL, Ubuntu) and Windows Server OS |

| Security Features | NVIDIA Confidential Computing (hardware-enforced data encryption); Secure Boot; Firmware TPM 2.0 |

NVIDIA H100

Product Introduction

The NVIDIA H100 is NVIDIA’s flagship data center GPU, engineered to redefine performance for AI training, inference, and high-performance computing (HPC) workloads. As the cornerstone of NVIDIA’s Hopper architecture lineup, the NVIDIA H100 bridges general-purpose computing and specialized AI acceleration, delivering high throughput for large language models (LLMs), scientific simulations, and real-time data analytics. Unlike the NVIDIA GH200 Grace Hopper Superchip, which pairs a Hopper GPU with an NVIDIA Grace CPU for system-level integration, the NVIDIA H100 is a standalone, GPU-centric accelerator, making it the go-to choice for organizations building scalable, GPU-dense data centers.

In modern AI infrastructure, the NVIDIA H100 acts as a “performance anchor”: its 16,896 CUDA Cores and 528 4th Gen Tensor Cores handle the compute-intensive demands of LLM training (e.g., GPT-3, PaLM), while its 80GB of HBM3 memory keeps large working sets close to the compute units, easing data-movement bottlenecks when processing terabyte-scale datasets. For example, a cloud service provider using a cluster of NVIDIA H100 GPUs can train a 175B-parameter LLM in weeks (vs. months with prior-gen GPUs), drastically reducing time-to-market for AI services. In HPC, the NVIDIA H100 accelerates simulations such as quantum chemistry and climate modeling, delivering 67 TFLOPS of FP64 Tensor Core performance for complex scientific problems. Today, the NVIDIA H100 remains a staple in data centers worldwide, trusted by enterprises, research labs, and cloud providers to power the next generation of AI and HPC innovations.

Core Advantages and Technical Highlights

4th Gen Tensor Cores for Breakthrough AI Performance: The NVIDIA H100’s 4th Gen Tensor Cores introduce FP8 precision, delivering 3,291 TFLOPS of AI training performance; the prior-gen A100 does not support FP8 at all. FP8 balances speed and accuracy, which is critical for training LLMs and computer vision models. For a retail company using the NVIDIA H100 to train a product recommendation model, FP8 can reduce training time by 50% while maintaining 99% of the accuracy achieved with higher-precision FP16. This efficiency lets teams iterate on models faster, improving recommendation relevance and customer engagement.
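
To make the speed/accuracy trade-off more concrete: Hopper’s FP8 Tensor Cores support two 8-bit formats, E4M3 (4 exponent bits, 3 mantissa bits) and E5M2 (5 exponent bits, 2 mantissa bits). The sketch below derives each format’s largest finite value and relative precision (the gap between 1.0 and the next representable number) from its bit layout, with FP16 for comparison. It assumes the common FP8 convention in which E5M2 follows IEEE 754 rules for infinities and NaNs, while E4M3 has no infinities and reserves only the all-ones bit pattern for NaN; the `FloatFormat` class is a hypothetical helper written for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FloatFormat:
    name: str
    exp_bits: int
    man_bits: int
    # True if the all-ones exponent code is reserved for Inf/NaN (IEEE 754 style).
    ieee_special: bool = True

    @property
    def bias(self) -> int:
        return 2 ** (self.exp_bits - 1) - 1

    def max_finite(self) -> float:
        if self.ieee_special:
            # Highest usable exponent code is all-ones minus one; mantissa all ones.
            max_exp = (2 ** self.exp_bits - 2) - self.bias
            max_mantissa = 2 - 2 ** -self.man_bits
        else:
            # E4M3 style: the all-ones exponent code is usable, but its all-ones
            # mantissa encodes NaN, so the largest mantissa is one step lower.
            max_exp = (2 ** self.exp_bits - 1) - self.bias
            max_mantissa = 2 - 2 * 2 ** -self.man_bits
        return max_mantissa * 2.0 ** max_exp

    def epsilon(self) -> float:
        # Gap between 1.0 and the next representable value.
        return 2.0 ** -self.man_bits


FORMATS = [
    FloatFormat("FP8 E4M3", 4, 3, ieee_special=False),
    FloatFormat("FP8 E5M2", 5, 2),
    FloatFormat("FP16", 5, 10),
]

if __name__ == "__main__":
    for fmt in FORMATS:
        print(f"{fmt.name:9s} max={fmt.max_finite():>9g} eps={fmt.epsilon():g}")
```

E4M3 tops out at 448 with a relative step of 0.125, while E5M2 reaches 57,344 with a coarser step of 0.25. This is why mixed-precision FP8 recipes commonly use E4M3 for weights and activations in the forward pass and E5M2 for gradients, which need more dynamic range.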

80GB HBM3 Memory for Large-Scale Workloads: Unlike GPUs with smaller memory pools (e.g., 40GB HBM2e), the NVIDIA H100’s 80GB HBM3 memory (with 3.35 TB/s bandwidth) enables end-to-end processing of large datasets without offloading to slower system memory. In a pharmaceutical research lab, the NVIDIA H100 can run molecular dynamics simulations on a 100M-atom protein structure—keeping all data in HBM3 to avoid 10x slowdowns associated with memory swapping. This capability is a game-changer for workloads where data locality directly impacts time-to-result.

NVLink 4.0 for Scalable Multi-GPU Clusters: The NVIDIA H100’s fourth-generation NVLink (900 GB/s of total bandwidth per GPU) connects up to 8 NVIDIA H100 GPUs in a single server through NVSwitch, forming a “GPU supercomputer” for distributed workloads. A university research team using 8 NVIDIA H100 GPUs linked via NVLink can run a climate simulation up to 8x faster than a single-GPU setup (assuming near-linear scaling), cutting the time to model a 10-year weather pattern from 8 weeks to about 1 week. NVLink also simplifies cluster management, because GPUs exchange data directly over the NVLink fabric instead of going through the PCIe bus or the data center network, reducing latency and complexity.
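
As a rough illustration of why direct GPU-to-GPU bandwidth matters, the sketch below estimates the ideal time to move a payload across a link, ignoring latency and protocol overhead. It takes the 900 GB/s NVLink figure as a bidirectional total (450 GB/s per direction) and compares it with a PCIe 5.0 x16 link, computed from 32 GT/s per lane and 128b/130b encoding. Both are peak figures, so real transfers will be slower; the function names are hypothetical.

```python
# Peak bandwidths in decimal GB/s.
NVLINK4_TOTAL_GBPS = 900.0  # aggregate of both directions, per H100 GPU
NVLINK4_PER_DIR_GBPS = NVLINK4_TOTAL_GBPS / 2


def pcie_per_dir_gbps(gt_per_s: float = 32.0, lanes: int = 16) -> float:
    """Per-direction PCIe bandwidth; PCIe 5.0 runs 32 GT/s with 128b/130b encoding."""
    return gt_per_s * lanes * (128 / 130) / 8


def transfer_seconds(payload_gb: float, bandwidth_gbps: float) -> float:
    """Ideal one-way transfer time, ignoring latency and protocol overhead."""
    return payload_gb / bandwidth_gbps


if __name__ == "__main__":
    payload = 80.0  # e.g., the full contents of one H100's HBM3
    nv = transfer_seconds(payload, NVLINK4_PER_DIR_GBPS)
    pc = transfer_seconds(payload, pcie_per_dir_gbps())
    print(f"NVLink: {nv:.3f} s, PCIe 5.0 x16: {pc:.3f} s, ratio {pc / nv:.1f}x")
```

Under these assumptions, NVLink moves the same payload a little more than 7x faster than a PCIe 5.0 x16 link, which is the gap that matters when GPUs exchange gradients or activations at every training step.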

Power Efficiency for Sustainable Data Centers: Despite its high performance, the NVIDIA H100 delivers 1.4x more performance per watt than the prior-gen A100. Its 700W TDP is optimized for dense server deployments, allowing 4 NVIDIA H100 GPUs to fit in a single 4U server (vs. 2 GPUs with less efficient models). For a hyperscaler operating a 10,000-GPU data center, the NVIDIA H100 reduces annual power costs by $1.2M (based on $0.10/kWh), aligning with sustainability goals while maximizing compute density.
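
Savings figures like the one above depend on assumptions about utilization, cooling overhead, and which baseline is being compared. As a starting point, the sketch below estimates a fleet’s annual electricity cost from GPU count, average power draw, and electricity price, and shows how a performance-per-watt ratio translates into avoided cost for a fixed amount of work. The PUE (power usage effectiveness) multiplier and the default of 24/7 operation are illustrative assumptions, not measured data, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_cost(
    num_gpus: int,
    avg_watts_per_gpu: float,
    usd_per_kwh: float,
    pue: float = 1.0,
    hours: float = HOURS_PER_YEAR,
) -> float:
    """Estimated yearly electricity cost in USD.

    pue scales GPU power to include facility overhead (cooling, power
    delivery); 1.0 means GPU power only.
    """
    kwh = num_gpus * avg_watts_per_gpu / 1000 * hours * pue
    return kwh * usd_per_kwh


def savings_at_equal_work(baseline_cost: float, perf_per_watt_gain: float) -> float:
    """Cost avoided when the same work runs on hardware with higher perf/watt."""
    return baseline_cost * (1 - 1 / perf_per_watt_gain)


if __name__ == "__main__":
    fleet = annual_energy_cost(10_000, 700, 0.10)
    print(f"10,000 GPUs at 700 W, $0.10/kWh: ${fleet:,.0f} per year")
    print(f"Share avoided at 1.4x perf/watt: {savings_at_equal_work(1.0, 1.4):.1%}")
```

Running 10,000 GPUs at full 700W TDP around the clock costs roughly $6.1M per year in GPU power alone at $0.10/kWh. A 1.4x performance-per-watt gain avoids about 29% of the energy bill for the same amount of work.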

Typical Application Scenarios

Large Language Model (LLM) Training: Cloud providers like AWS and Google Cloud use the NVIDIA H100 to power their AI-as-a-service offerings. For example, AWS’s P5 instances (equipped with 8 NVIDIA H100 GPUs) let customers train 175B-parameter LLMs in ~20 days—down from 60 days with A100-based instances. The NVIDIA H100’s FP8 precision and HBM3 memory ensure that even the largest models run efficiently, enabling customers to build custom LLMs for chatbots, content generation, and code assistance.
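
A back-of-the-envelope check shows why 175B-parameter models are trained across multiple GPUs: the weights alone need (parameter count × bytes per parameter) of memory. The sketch below counts weights only. Training also needs memory for gradients, optimizer state, and activations, so real requirements are several times higher. It treats the card’s 80GB as 80 decimal GB, which is an approximation, and the helper names are hypothetical.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights only, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, dtype: str, gpu_mem_gb: float = 80.0) -> int:
    """Lower bound on GPUs needed just to hold the weights
    (no gradients, optimizer state, or activations)."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_mem_gb)


if __name__ == "__main__":
    for dtype in ("bf16", "fp8"):
        gb = weight_memory_gb(175e9, dtype)
        n = min_gpus_for_weights(175e9, dtype)
        print(f"175B params in {dtype}: {gb:.0f} GB of weights, at least {n} x 80GB GPUs")
```

Even in FP8, a 175B-parameter model’s weights (about 175 GB) exceed a single 80GB GPU, so multi-GPU systems such as 8-GPU instances are the practical unit for this class of model.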

Quantum Chemistry Simulations: Research labs like MIT’s Department of Chemistry use the NVIDIA H100 to accelerate quantum chemistry calculations. The GPU’s 67 TFLOPS of FP64 performance lets scientists model the behavior of complex molecules (e.g., drug candidates) in hours—vs. days with CPU-only systems. For a team developing a new cancer treatment, the NVIDIA H100 reduces the time to simulate a molecule’s interaction with a protein from 48 hours to 6 hours, speeding up drug discovery timelines.

Real-Time AI Inference for Edge Clouds: Telecommunication companies use the NVIDIA H100 in edge data centers to run low-latency AI inference. For example, a 5G provider deploying the NVIDIA H100 can process a large number of concurrent video streams per GPU (for real-time object detection in smart cities) with sub-10ms latency. TensorRT-optimized models further boost inference throughput, ensuring that critical applications like traffic management and public safety run without delays.


Related Model Recommendations

NVIDIA GH200: Grace Hopper Superchip. The NVIDIA GH200 pairs a Hopper GPU with an NVIDIA Grace CPU over the NVLink-C2C interconnect, giving the two processors a coherent, shared memory space. It is ideal for workloads needing unified system memory (e.g., large-scale AI training with multi-terabyte datasets) and is preferred over the standalone NVIDIA H100 in scenarios where system-level integration is prioritized over GPU-only performance.

NVIDIA A100: Prior-Gen Alternative. The NVIDIA A100 (Ampere architecture) offers lower peak AI performance than the NVIDIA H100, and its 3rd Gen Tensor Cores lack FP8 support, but it comes at a lower cost. It’s a cost-effective choice for small-to-medium enterprises (SMEs) training smaller models (e.g., 10B-parameter LLMs) or running basic HPC workloads.

NVIDIA H100 PCIe: Low-Power Variant. The NVIDIA H100 PCIe (350W TDP) is a reduced-power version of the NVIDIA H100, designed for servers with limited power budgets (e.g., edge data centers). It retains 80% of the full-power H100’s performance, making it suitable for inference-heavy workloads.

NVIDIA DGX H100: Turnkey AI System. The NVIDIA DGX H100 is a pre-configured server with 8 NVIDIA H100 GPUs, NVLink 4.0, and NVIDIA AI Enterprise software. It eliminates the complexity of building a custom GPU cluster, ideal for research labs and enterprises new to large-scale AI.

Dell PowerEdge R760xa: H100-Optimized Server. The Dell PowerEdge R760xa supports up to 4 double-wide PCIe GPUs, such as the NVIDIA H100 PCIe, with redundant power supplies and cooling designed for high-power accelerators. It’s a reliable choice for enterprises deploying the NVIDIA H100 in production data centers.

NVIDIA Quantum-2 InfiniBand Switch: Networking Complement. The NVIDIA Quantum-2 switch (400 Gb/s per port) works with the NVIDIA H100 to build multi-node GPU clusters. It reduces network latency by 50% vs. Ethernet switches, critical for distributed LLM training.

PyTorch 2.0: AI Framework Optimization. PyTorch 2.0 includes compiler optimizations for the NVIDIA H100’s Hopper architecture, delivering 30% faster training for LLMs. It’s the de facto framework for teams using the NVIDIA H100 to build custom AI models.

NVIDIA AI Enterprise 5.0: Software Suite. NVIDIA AI Enterprise 5.0 provides enterprise-grade support for the NVIDIA H100, including pre-trained models, security patches, and 24/7 technical assistance. It’s essential for enterprises deploying the NVIDIA H100 in regulated industries (e.g., healthcare, finance).

Installation, Commissioning and Maintenance Instructions

Installation Preparation: Before installing the NVIDIA H100, verify that the server supports PCIe 5.0 x16 (required for full bandwidth) and has a 700W+ power supply per GPU (with 8-pin or 12VHPWR connectors). Use an anti-static wristband and mat to prevent ESD damage to the GPU’s 4nm circuitry. Gather tools: Phillips screwdriver (for securing the GPU), thermal paste (if replacing the cooler), and a torque wrench (to avoid over-tightening screws). Ensure the server’s cooling system (air or liquid) can handle the NVIDIA H100’s 700W TDP—install additional fans or liquid cooling loops if needed. Avoid installing the NVIDIA H100 near server components that generate excessive heat (e.g., CPUs, hard drives) to prevent thermal throttling.

Maintenance Suggestions: Monitor the NVIDIA H100’s health using the NVIDIA System Management Interface (nvidia-smi) or NVIDIA DCGM (Data Center GPU Manager). Check for temperature spikes (keep under 85°C) and memory errors (ECC logs corrected errors). Clean the GPU’s cooler every 3 months: use compressed air to remove dust from fans and heat sinks (avoid liquid cleaners). Update the NVIDIA H100’s firmware and drivers monthly (via NVIDIA Enterprise Support) to access performance improvements and security patches. If a GPU fails, run the DCGM diagnostics (dcgmi diag) to isolate the issue, and replace the GPU if a hardware defect is confirmed. For multi-GPU clusters, run monthly NVLink connectivity tests to confirm that each GPU reaches its expected NVLink bandwidth (900 GB/s aggregate per GPU).
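
The temperature and ECC checks above can be scripted. The sketch below assumes output from a query such as `nvidia-smi --query-gpu=index,temperature.gpu,ecc.errors.corrected.volatile.total --format=csv,noheader,nounits`, parses it, and flags GPUs at or above the 85°C guideline or reporting corrected ECC errors. The function names are hypothetical, and a sample string stands in for real command output so the sketch runs without a GPU.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Optional

TEMP_LIMIT_C = 85  # guideline from the maintenance notes: keep under 85°C


@dataclass
class GpuHealth:
    index: int
    temp_c: int
    ecc_corrected: Optional[int]  # None when nvidia-smi reports N/A


def parse_gpu_csv(text: str) -> List[GpuHealth]:
    """Parse csv,noheader,nounits output for index, temperature, corrected ECC."""
    rows = []
    for fields in csv.reader(io.StringIO(text)):
        if not fields:
            continue
        index, temp, ecc = (f.strip() for f in fields)
        ecc_count = int(ecc) if ecc.isdigit() else None
        rows.append(GpuHealth(int(index), int(temp), ecc_count))
    return rows


def health_warnings(gpus: List[GpuHealth]) -> List[str]:
    """Return human-readable warnings for hot GPUs or corrected ECC errors."""
    warnings = []
    for g in gpus:
        if g.temp_c >= TEMP_LIMIT_C:
            warnings.append(f"GPU {g.index}: {g.temp_c} C (limit {TEMP_LIMIT_C} C)")
        if g.ecc_corrected:
            warnings.append(f"GPU {g.index}: {g.ecc_corrected} corrected ECC errors")
    return warnings


# Stand-in for real nvidia-smi output (index, temperature, corrected ECC count).
SAMPLE = """0, 62, 0
1, 87, 0
2, 70, 3
"""

if __name__ == "__main__":
    for line in health_warnings(parse_gpu_csv(SAMPLE)):
        print(line)
```

On a real server, `SAMPLE` would be replaced by the `stdout` of `subprocess.run([...], capture_output=True, text=True)` running the query above, and the script could be scheduled alongside DCGM monitoring.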

Service and Guarantee Commitment

NVIDIA backs the NVIDIA H100 with a 3-year limited warranty, covering manufacturing defects in materials and workmanship. Each NVIDIA H100 undergoes rigorous testing before shipment, including 48-hour stress tests (AI training and HPC workloads), thermal cycling (-40°C to 85°C), and ECC memory validation—ensuring compliance with NVIDIA’s strict quality standards.

Our 24/7 global technical support team provides assistance with NVIDIA H100 installation, driver configuration, and workload optimization. For enterprise customers, NVIDIA offers Premium Support—including on-site service within 4 hours (in major metropolitan areas) and dedicated technical account managers. We also provide customized training (e.g., “H100 Optimization for LLMs”) to help teams maximize the GPU’s performance. For customers upgrading to the NVIDIA GH200, NVIDIA offers trade-in programs to offset costs and ensure a seamless transition. With a global network of authorized service centers, we minimize downtime for NVIDIA H100 deployments—keeping your AI and HPC workloads running without interruption.